1) You are doing it all wrong!

What is your success metric? What is your hypothesis? What are your KPIs?

These are inherently flawed questions. Let me tell you why:

These questions would be relevant if we had used the traditional hypothesis-brainstorming method to build our testing backlog. But, as I’ve pointed out before, having a hypothesis means you have already settled on a solution. It also biases you and others toward that hypothesis, because you are incentivized to prove it right.

Brainstorming ideas and questions instead is more innovation-friendly: it keeps you open to genuinely evaluating and understanding the results, and leaves you free to act on them appropriately.

2) Improve VALUE, not METRICS.

Let’s play this out. What if we don’t have a hypothesis? What if, instead, we say: “We have the data and have done the test scoping to believe that this will have a net positive impact on the company.”

Now imagine how you would analyze the results…

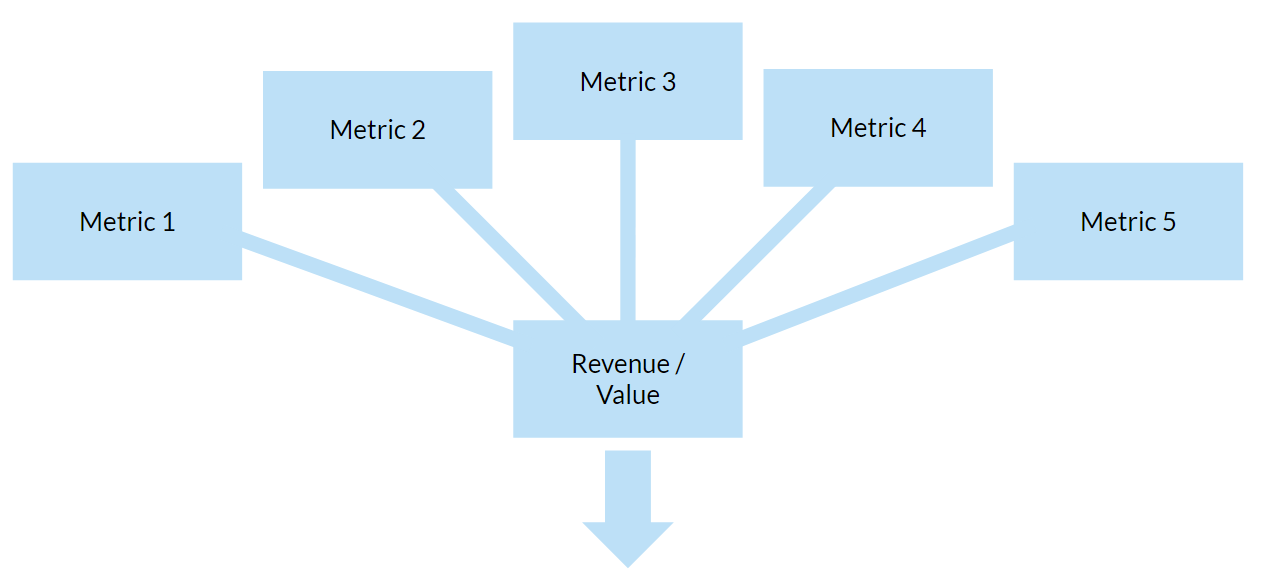

You probably aren’t imagining whether the hypothesis was right or wrong, are you? More likely, you are evaluating a lot of metrics holistically, thinking about their impact on the company and, for that matter, about what defines a “net positive impact on the company”.

And here is the kicker: when you look at the metrics holistically, you discover hidden user-behavior gems that help you refine your product and generate new ideas you wouldn’t have found if you were focused on specific success metrics.

These are the questions you should be asking, because they are the ones that actually matter.

3) False: Mo’ money, mo’ problems.

You may also be thinking that this is a less data-driven approach. That is where you’d be wrong: it actually requires you to be more data-driven. The key is in defining what a net positive impact to the company is. At the end of the day, and especially if you’ve read “The Goal” by Goldratt, you will understand that the purpose of a company is to make money or, more broadly, to increase the value of the company.

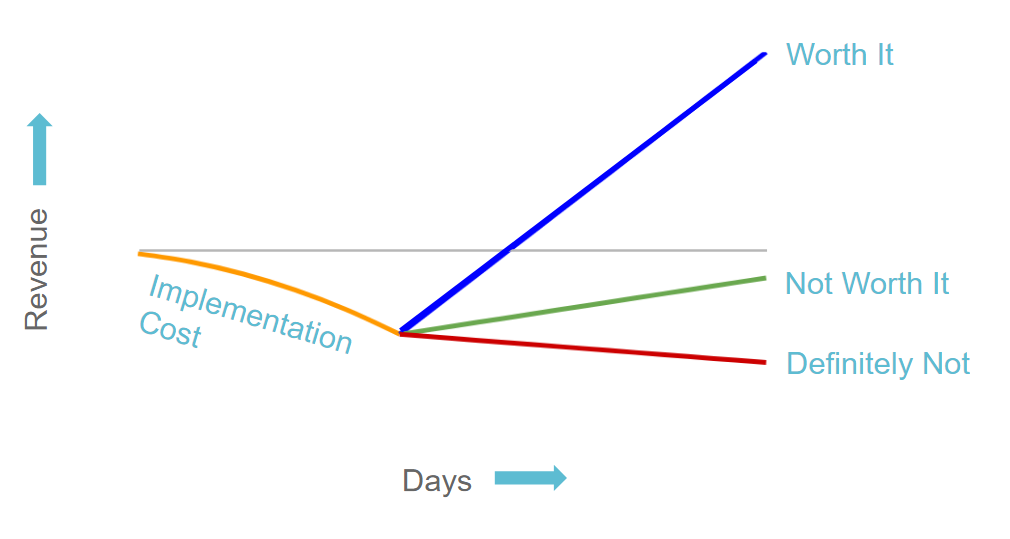

You do have a hypothesis, and it is always the same one: this will create significantly more value than it costs.

So, with that in mind, your questions about the test results shouldn’t be “what impact did it have on my success metric?” or “was the hypothesis proven right or wrong?” Instead, they should be “what were the positive and negative impacts across all of the key metrics?” and “what would the resulting impact on revenue be if we implemented it?”
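To make the second question concrete, here is a minimal sketch of rolling every metric movement from a test into a single revenue number. It assumes you have already mapped each KPI to a dollar value per unit of change (the `revenue_per_unit` field is that assumed mapping), and the metric names and figures are hypothetical. Note that a metric like time on the app gets a dollar value too, even if a rough one.

```python
from dataclasses import dataclass


@dataclass
class MetricImpact:
    name: str
    delta: float             # change in the metric if the variant ships (e.g. per month)
    revenue_per_unit: float  # ASSUMED dollars of revenue per one-unit change in the metric


def revenue_contributions(impacts: list[MetricImpact]) -> dict[str, float]:
    """Translate each metric's change into dollars, keeping the sign."""
    return {m.name: m.delta * m.revenue_per_unit for m in impacts}


def net_revenue_impact(impacts: list[MetricImpact]) -> float:
    """Sum positive and negative contributions across all key metrics."""
    return sum(revenue_contributions(impacts).values())


# Hypothetical test readout; every number here is illustrative.
impacts = [
    MetricImpact("signups", delta=120, revenue_per_unit=40.0),
    MetricImpact("support_tickets", delta=300, revenue_per_unit=-5.0),
    MetricImpact("app_minutes", delta=-2000, revenue_per_unit=0.25),
]
print(revenue_contributions(impacts))
# → {'signups': 4800.0, 'support_tickets': -1500.0, 'app_minutes': -500.0}
print(net_revenue_impact(impacts))
# → 2800.0
```

The per-metric breakdown is kept alongside the total on purpose: the total drives the decision, while the breakdown is where the hidden user-behavior insights tend to show up.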

Also note how much simpler this makes the question of what to test and what to implement. It is not whether a change moves this metric or that one; it is how much value we can add, and at what cost. So when you are weighing two tests, or two product features, against each other, it comes down to value at cost.
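Comparing candidates by value at cost can be as simple as the sketch below. It assumes each option already has an expected revenue impact over a common time horizon and a cost estimate (engineering hours times a loaded rate, plus other costs); the option names and figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    expected_revenue: float  # estimated revenue impact if implemented, over an ASSUMED common horizon
    cost: float              # engineering hours x loaded rate, plus any other associated costs

    @property
    def net_value(self) -> float:
        return self.expected_revenue - self.cost


def rank_by_value_at_cost(options: list[Option]) -> list[Option]:
    """Order candidate tests or features from highest to lowest net value."""
    return sorted(options, key=lambda o: o.net_value, reverse=True)


# Hypothetical candidates; the figures are made up for illustration.
candidates = [
    Option("feature_b", expected_revenue=80_000, cost=60_000),
    Option("feature_a", expected_revenue=50_000, cost=20_000),
]
for option in rank_by_value_at_cost(candidates):
    print(option.name, option.net_value)
```

Here `feature_b` brings in more revenue, but `feature_a` wins on net value (30,000 vs. 20,000). Ranking by net value is one reasonable choice; if engineering capacity is the binding constraint, ranking by revenue per dollar of cost may fit better.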

4) Don’t buy low-value at a high price.

Now your choice is straightforward. You wouldn’t walk into a store, see two items in the same category sitting next to each other, and buy the one priced way higher than the other, would you?

So why don’t people evaluate their tests this way?

To be honest, I’m not sure, but my best guesses are: 1) it takes effort and is not the easiest path, because you actually have to understand and map the relationships between your KPIs and revenue; and 2) people have a hard time quantifying what is not easily quantifiable, say “time on the app”. It’s not that such a metric can’t be quantified in terms of revenue; it is just that you haven’t spent the time and brainpower to figure out how.

What is that classic manager saying again? “Don’t bring me excuses, bring me answers!”? Something like that.

So if you have quantified your tests and results in terms of revenue, the first piece of the go-forward decision is straightforward: will this make us more money than it costs us, counting engineering hours and any other associated costs? If not, it obviously doesn’t make sense.

The second piece is whether it generates enough revenue at that cost to be worth our while. For this, I generally defer to a rule of thumb for early-stage companies: a minimum aggregate impact of 5% on a core KPI. But the right threshold really varies by company and stage.
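The two-piece decision can be sketched as a single check. This assumes the 5% rule of thumb is read as a relative lift on one core KPI (my interpretation; "aggregate" could also mean summed across several changes), and it makes the threshold a parameter because it varies by company and stage. The numbers are hypothetical.

```python
def go_forward(
    expected_revenue: float,
    cost: float,
    kpi_baseline: float,
    kpi_with_change: float,
    min_lift: float = 0.05,  # early-stage rule of thumb; tune per company and stage
) -> bool:
    """Two-part go / no-go check (a sketch, not a complete decision framework)."""
    # Piece 1: will this make us more money than it costs us?
    if expected_revenue <= cost:
        return False
    # Piece 2: is the relative lift on a core KPI large enough to be worth our while?
    lift = (kpi_with_change - kpi_baseline) / kpi_baseline
    return lift >= min_lift


# Hypothetical numbers, with weekly orders as the core KPI.
print(go_forward(50_000, 20_000, kpi_baseline=10_000, kpi_with_change=10_600))  # 6% lift
print(go_forward(50_000, 20_000, kpi_baseline=10_000, kpi_with_change=10_300))  # 3% lift
print(go_forward(15_000, 20_000, kpi_baseline=10_000, kpi_with_change=10_600))  # costs more than it earns
```

Only the first call clears both pieces; the second is profitable but falls short of the minimum lift, and the third fails the cost check before the lift is even considered.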

While the go-forward / no-go-forward decision is largely situation-specific, in my next post I will dive into some considerations that define and align decision tradeoffs to optimize for speed, such as Airbnb’s “dynamic decision boundary” and the key benefits, costs, and risks that should be accounted for in every decision.