Playing a game of blind darts.

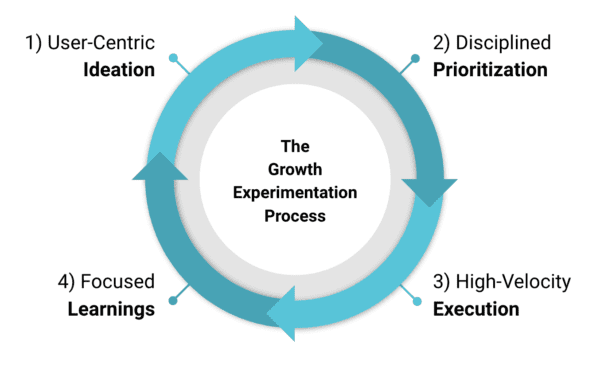

OK, so you have a bunch of ideas that you think will help grow the company, increase user engagement, reduce friction, or move whatever your company’s growth goals happen to be. How do you decide what to test and validate first?

In an ideal world, you can calculate the estimated impact on KPIs along with the estimated engineering effort it would take to implement the solution if the test succeeds, and rank your ideas from there.

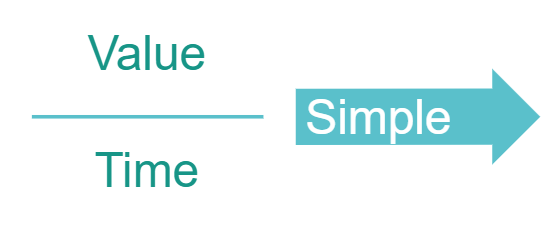

Unfortunately, that is not always possible, but the same approach still holds. Remember the high-level equation we are trying to optimize: achieving the most value in the shortest time, as simply as possible. In this stage (test scoping and prioritization), we are optimizing our process for time.

Have a destination.

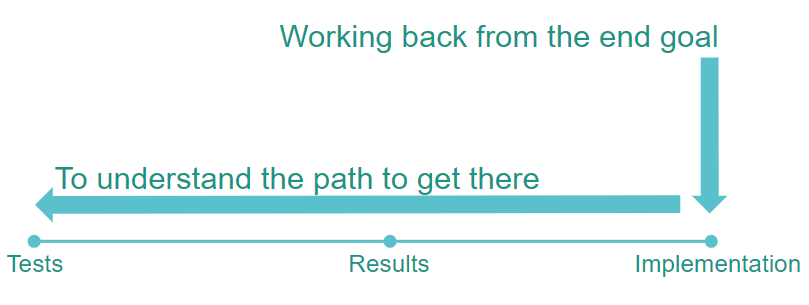

The first thing people tend to miss when deciding what to test is what solution would actually be implemented if the test were successful, and how much effort implementing that solution would take.

And what risks would it carry?

It’s easy to come up with ideas without really reflecting on their long-term implications. Thinking about a single subject from multiple angles and time horizons takes brainpower and effort.

If you test without considering this, you are also flying blind about what results and confidence level you would need to make a go/no-go decision on implementing it.

What if what you are testing doesn’t have a sustainable long-term solution that you could implement EVEN if the test were successful? If you haven’t thought that through, well, then you are in some shit.

I think of it like this:

Then pick the most efficient path to get there.

So what do we need to consider, anyway? If I went to a product manager or the engineering team and said, “Hey, we should implement this” without having thought through what that entails, damn, would I feel stupid. It is your job in growth to think these things through and tee them up for other departments to execute.

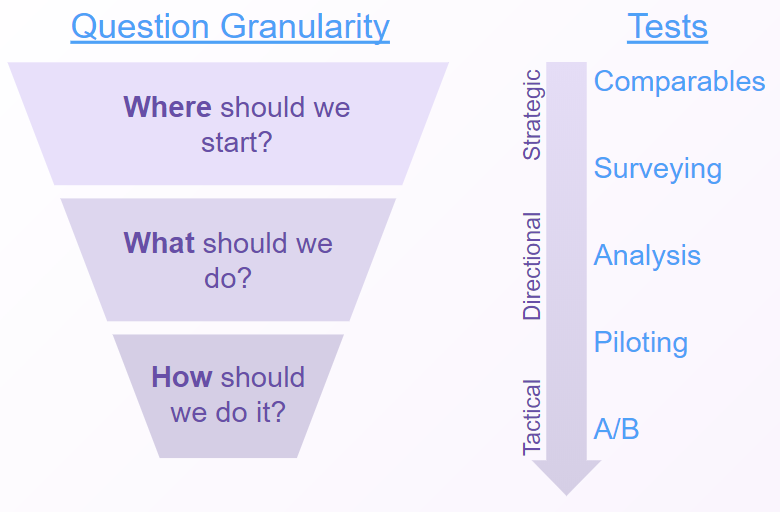

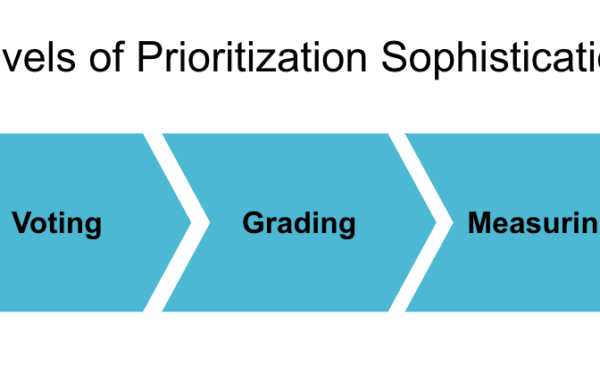

I will talk about how we evaluate and prioritize ideas before testing them, but first, let me dispel the myth that all testing is done through A/B or multivariate experiments.

Not true.

In fact, if your product is in its early stages (less than ~2 years since launch) and you are A/B testing a feature or acquisition channel, you have probably skipped a few stages. A/B testing is typically a way to optimize something that is already proven, so if you are A/B testing a product feature, you are by definition assuming that the existing solution is proven. In most cases within the first 2 years, it’s not.

The end goal is to figure out how to achieve our growth goals in the shortest time, so we need answers that help us decide how to get there. There are many ways to get answers, and some approaches are better suited, or faster, than others depending on the question and the level of confidence we need.

Here is a framework I think about:

When a company is in its early stages, many of the questions are strategic: where should we start, and where are the opportunities? As you progress and iterate, you learn where the opportunities are, what you should do to tackle them, and finally how to do it to achieve maximum results. I’ve laid out 5 of the most common methods I use to get answers, roughly ordered by granularity, though the right one really depends on your specific use case.

One of the most worthwhile exercises before settling on a test method is to see whether you can walk up this funnel. As with the “5 Whys” method, the benefit is exposing assumptions that aren’t proven: by challenging yourself to validate those higher-level assumptions first, with less granular tests, you naturally simplify your approach and gain speed.

It will have been time well spent, trust me.

Possibly one of the best uses of time: evaluating prioritization.

We have an estimated impact on company growth goals, and we know how we plan to test it. How do we decide where our efforts are best spent from there?

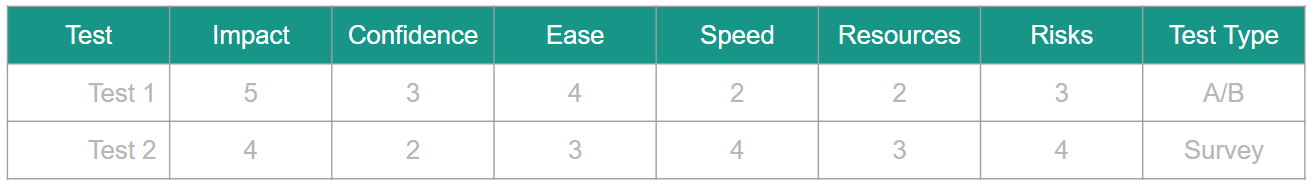

You might have heard of the classic “I.C.E.” scoring method, which stands for Impact, Confidence, and Ease.

The thing is, ICE falls short because it considers only the testing side of the equation, not the implementation. I propose a new acronym, ICESRR: Impact, Confidence, Ease, Speed, Resources, and Risks.

Impact is the effect on company growth KPIs. Ease (the opposite of difficulty) is how easy the test is to execute. Speed is how long you estimate it will take the test to reach results. Resources is how many resource-hours the solution would take to implement if the test succeeds. Risks covers any potential downsides or unknown effects the solution might have once implemented. And lastly, Confidence is how sure you are in the scores you gave.

Additionally, we give each scoring factor its own weighting, reflecting how important that factor is to your org and its decision-making. The weightings vary with your culture and your company’s stage of development.

Then multiply each score by its weighting and add the results together to get a combined “value score.” This gives you a fair way to compare and rank tests against each other and decide which would be most valuable to prioritize first.
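Here is a minimal sketch of that weighted “value score” calculation. Everything concrete in it is an assumption for illustration: the weights, the three idea names, and their scores are hypothetical, and each factor is assumed to be scored on a 1 to 10 scale oriented so that higher is always better. Confidence is treated as just another weighted term, as described above.

```python
from dataclasses import dataclass

# Hypothetical weights: every org picks its own. These happen to sum to 1.0.
WEIGHTS = {
    "impact": 0.30,
    "confidence": 0.10,
    "ease": 0.15,
    "speed": 0.15,
    "resources": 0.20,
    "risks": 0.10,
}


@dataclass
class TestIdea:
    name: str
    # Assumed 1-10 scores, oriented so higher is always better:
    # high "speed" = fast results, high "resources" = FEW resource-hours to
    # implement, high "risks" = LOW risk.
    scores: dict[str, float]


def value_score(idea: TestIdea, weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted sum of the ICESRR scores."""
    return sum(weights[factor] * idea.scores[factor] for factor in weights)


def rank(ideas: list[TestIdea]) -> list[tuple[str, float]]:
    """Return (name, value score) pairs, highest value first."""
    scored = [(idea.name, value_score(idea)) for idea in ideas]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical backlog of test ideas.
ideas = [
    TestIdea("onboarding checklist", {"impact": 8, "confidence": 6, "ease": 7,
                                      "speed": 6, "resources": 5, "risks": 8}),
    TestIdea("referral bonus", {"impact": 9, "confidence": 4, "ease": 5,
                                "speed": 4, "resources": 3, "risks": 5}),
    TestIdea("pricing page copy", {"impact": 5, "confidence": 7, "ease": 9,
                                   "speed": 8, "resources": 9, "risks": 9}),
]

for name, score in rank(ideas):
    print(f"{name}: {score:.2f}")
```

Note the design choice: Speed, Resources, and Risks are “lower is better” quantities in raw form, so the sketch assumes they are inverted into “higher is better” scores before weighting. Without that, an expensive, risky idea would float to the top. In this made-up backlog, the highest-impact idea (the referral bonus) ends up last once implementation cost and risk are counted.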

Ideally, these scores are derived from hard data and analysis. For instance, the impact score comes from an estimate of impact on a growth goal: the exposed audience size multiplied by the estimated KPI lift, as discussed in the last post. The effort is determined by an engineering-hours estimate for the solution that would be implemented.
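As a rough sketch of how those raw estimates could feed the scores: impact is exposed audience × estimated KPI lift, and the engineering-hours estimate becomes an inverted score. The candidate names, audience sizes, lifts, hours, and the linear 1 to 10 mapping are all hypothetical; the lift is assumed to be an absolute change in a signup rate.

```python
def estimated_impact(exposed_audience: int, kpi_lift: float) -> float:
    """Expected KPI gain: people exposed to the change x estimated absolute lift."""
    return exposed_audience * kpi_lift


def impact_score(impact: float, largest_impact: float) -> float:
    """Hypothetical 1-10 scale: the biggest candidate gets 10, others scale linearly."""
    return 1 + 9 * impact / largest_impact


def resources_score(eng_hours: float, fewest_hours: float) -> float:
    """Hypothetical 1-10 scale, inverted: the cheapest solution to build gets 10."""
    return 1 + 9 * fewest_hours / eng_hours


# Hypothetical candidates:
# (name, users exposed per month, absolute lift in signup rate, eng hours to implement)
candidates = [
    ("shorter signup form", 50_000, 0.02, 40),
    ("social login", 200_000, 0.003, 120),
    ("welcome email", 5_000, 0.05, 16),
]

impacts = {name: estimated_impact(users, lift) for name, users, lift, _ in candidates}
largest = max(impacts.values())
fewest = min(hours for _, _, _, hours in candidates)

for name, _, _, hours in candidates:
    print(f"{name}: +{impacts[name]:.0f} signups/month, "
          f"impact score {impact_score(impacts[name], largest):.1f}, "
          f"resources score {resources_score(hours, fewest):.1f}")
```

For example, 50,000 exposed users × a 2-point lift works out to about 1,000 extra signups a month, while the social login idea reaches four times as many users but, at a 0.3-point lift, yields only about 600.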

As your growth process matures, you should have your KPIs and their associated impact on revenue modeled out. With some basic assumptions and estimates, you can boil all of this down to a simple ratio: revenue / engineering hours.
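A minimal sketch of that revenue / engineering hours ratio follows. The candidate names and numbers are hypothetical, and the revenue side is assumed to be monthly revenue from a modeled revenue-per-unit of the KPI; you could just as reasonably use annualized revenue, as long as every candidate uses the same horizon.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    monthly_kpi_gain: float   # estimated extra units of the KPI per month (assumption)
    revenue_per_unit: float   # modeled revenue per unit of the KPI (assumption)
    eng_hours: float          # engineering estimate to implement if the test wins

    @property
    def monthly_revenue(self) -> float:
        return self.monthly_kpi_gain * self.revenue_per_unit

    @property
    def revenue_per_eng_hour(self) -> float:
        return self.monthly_revenue / self.eng_hours


# Hypothetical candidates.
candidates = [
    Candidate("annual plan discount", 40, 120.0, 80),
    Candidate("in-app upsell", 150, 15.0, 30),
    Candidate("cart reminder email", 60, 25.0, 10),
]

# Rank by revenue per engineering hour, best first.
for c in sorted(candidates, key=lambda c: c.revenue_per_eng_hour, reverse=True):
    print(f"{c.name}: ${c.monthly_revenue:,.0f}/month, "
          f"${c.revenue_per_eng_hour:,.2f} per engineering hour")
```

In this made-up example, the annual plan discount brings in the most revenue ($4,800/month) but ranks last at $60 per engineering hour, while the cart reminder email ranks first at $150 per hour. That is the point of dividing by effort rather than ranking on impact alone.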

Think differently, think faster.

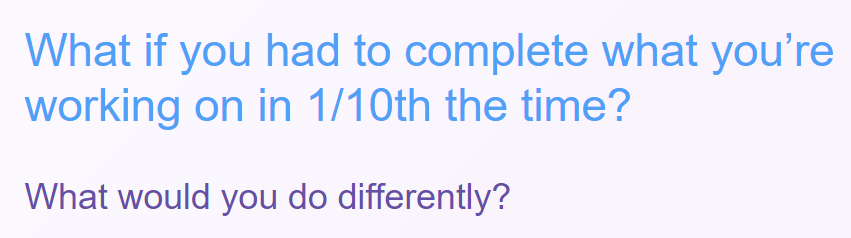

In the next post, I will discuss how to execute scoped tests quickly and how to think about keeping the implementation of successful tests simple. Remember from the last post on test ideation: during test scoping and prioritization, the primary objective we are optimizing for is speed. And so I leave you with this thought: